
Jamstack SEO Guide

Whether you are running a Jamstack website or not, this is a comprehensive overview of everything you need to know to succeed at SEO.

Two years ago, we released this guide with a couple of goals in mind.

First, we wanted to rank high for the keyword Jamstack SEO (and we succeeded: we held the #1 or #2 spot at any given time last year; the examples at the end of the post explain how we did it) and for SEO guide (which, as expected, we flunked completely; once you read the whole guide, it should be clear why).

Second, we hoped to shed some light on SEO so that Jamstackers could understand its often-overlooked importance and the best practices they can apply for themselves and their clients. The 1k downloads of the guide’s PDF version suggest we managed that as well.

Two years is a long time, and a lot has changed in the world of SEO and Jamstack. What’s more, things will change even further come May 2021 (I’ll get to that in a minute), so now is the perfect time to rewrite and update this guide so you can act in time.

So, let’s get started.

I’ve added my main keywords to the first paragraph, within the first 100 words. Furthermore, if you look at the page source code, you’ll see that the TITLE is between 55 and 70 characters (about 570 pixels) long, including spaces, and the meta DESCRIPTION is between 120 and 160 characters long, with a descriptive CTA.
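If you want to run the same check on your own pages, here is a minimal Python sketch (standard library only) that reads a page’s `<title>` and meta description and compares their lengths against the 55 to 70 and 120 to 160 character ranges quoted above. Treat those ranges as this guide’s rules of thumb rather than hard limits; the sketch counts characters only and does not measure rendered pixel width.

```python
from html.parser import HTMLParser

# Character ranges quoted in this guide; rules of thumb, not official Google limits.
TITLE_RANGE = (55, 70)
DESCRIPTION_RANGE = (120, 160)


class HeadTagParser(HTMLParser):
    """Collects the <title> text and the meta description content."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_head(html_doc):
    """Return the title/description lengths and whether each falls in its range."""
    parser = HeadTagParser()
    parser.feed(html_doc)
    title = parser.title.strip()
    description = (parser.description or "").strip()
    return {
        "title_length": len(title),
        "title_ok": TITLE_RANGE[0] <= len(title) <= TITLE_RANGE[1],
        "description_length": len(description),
        "description_ok": DESCRIPTION_RANGE[0] <= len(description) <= DESCRIPTION_RANGE[1],
    }
```

Feed it the HTML of a built page (for example, a file from your output folder) and fix any page where either flag comes back `False`.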

The tips above👆 and throughout this post are not the only things that make SEO what it is nowadays.

Though still important, SEO has grown beyond simple keyword placement and TITLE and META description tag optimization. Google uses hundreds of ranking factors (if not more) to rank pages, but we don’t know the exact weight each of them carries in its ranking algorithms.

And that’s not all.

Fierce competition, educated searchers, frequent algorithm updates, and a crazy number of new features in search results (only 2.4% of all Google search results don’t contain at least one SERP feature) have made SEO more complex than ever before. Not to mention clients’ high expectations.

Nowadays, if you want to do SEO properly, you have to think beyond the desired keyword, title, and other tags. You also have to take branding, audience behavior, search intent, user experience, backlinks, analytics, and competition into consideration. And, from May 2021, page experience signals, led by Core Web Vitals.

It's not a one-time thing anymore.

And the traffic gains from SEO have changed. Take keywords with featured snippets, for example. According to Ahrefs’ research data, 12.29% of search queries have featured snippets in their search results.

For those queries, earning the #1 organic spot brings you less traffic and a lower click-through rate than the same spot did a few years back. What’s more, earning the featured snippet doesn’t guarantee decent traffic either.

Even though Google refers less outgoing traffic to websites than before, it is still the #1 source of traffic for most websites. That being the case, digging deeper into SEO might be more important for developers and marketers than ever before.

And, believe it or not, having a Jamstack website helps a lot.


What is SEO (search engine optimization)?

We use an H2 tag for this subtitle, with a slightly different keyword in it (you’ll see this often throughout the post), because, who knows, maybe the big G picks it up and shows it in the People Also Ask (Related Questions) box.

According to Web Almanac, Search Engine Optimization (SEO) is the practice of optimizing websites’ technical configuration, content relevance, and link popularity to make their information easily findable and more relevant to fulfill users’ search needs.

In layman’s terms, SEO’s whole purpose is to help your content rank better in search engines for the keywords you target and get it in front of your potential audience. In practice, you are trying to get your content into the top 5 spots on the first page, as they get 67.6% of all the clicks from that search results page.

With 91.86% of the market share, Google Search is the undisputed leader of the global search engine market. That’s why most guides focus on tips, tricks, and tactics that help you rank better in Google, and this guide is no different.

As I already mentioned, SEO today goes beyond page optimization, and it can be broadly divided into two parts: ⚙️ technical SEO and 📄 content SEO.

Let’s dive into the best practices and see where the Jamstack fits.

Technical SEO

Don’t be lazy, and check out the source code once more. You’ll see our HTML code for the above image as:

Keywords in the image file name and alt attribute. The goal here is to provide useful, information-rich content that uses keywords appropriately and in the context of the page/section, and to help the image appear in Google Images for the targeted keyword.

A huge subject on its own, technical SEO makes sure your content can play its central role in SEO. It focuses on giving your website better visibility and creating a better web experience for both users and search engines.

Basically, with technical SEO, you are tackling web performance, indexing, and crawlability.

Web Performance

We use an H3 for this subtitle. Google and other search engines use H tags to understand the on-page structure of the text. Since this topic is part of a bigger one, it needed a lower-level H tag to signal that to the crawlers.

Web performance refers to the speed at which web pages are downloaded and displayed in the user’s web browser (Wikipedia’s definition).

Performance is where the work of web developers and SEOs meets. Ever since worldwide mobile internet use surpassed desktop in 2016, speed has become an increasingly important ranking factor. We are now at a point where a fast-loading website is essential to a great search experience. And Google, being Google, values user behavior so highly that it found a way to combine and measure UX and performance: Page Experience signals and Core Web Vitals.

Page Experience

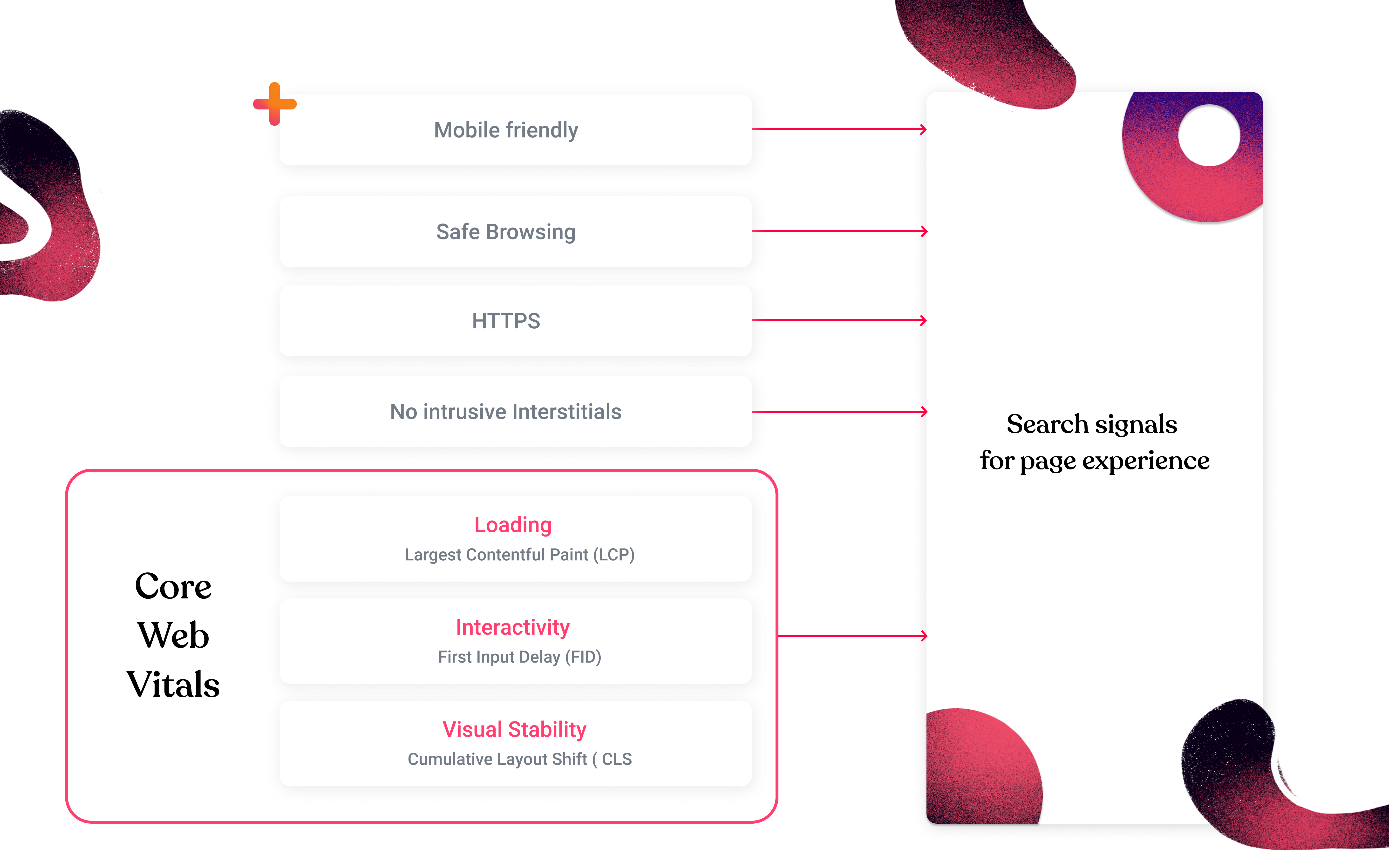

Page experience is a set of signals that measure how users perceive the experience of interacting with a web page beyond its content. The following signals are essential for delivering a good page experience according to Google:

Mobile-friendly. Are we still talking about this? A responsive design that adjusts to the screen is not an optional extra for your website; it is a necessity from both users’ and search engines’ perspectives. Use Google’s Mobile-Friendly Test or check Google Search Console under Enhancements > Mobile Usability for problems.

With Google switching all websites to mobile-first indexing as of March 2021, you need to make sure that your website not only displays perfectly on multiple screens but also has an easily accessible mobile version that Googlebot can crawl and index.

Safe-browsing. Keeping your website safe for browsing is hugely important. Scan all website files to find and remove malicious software, and monitor and regularly update every part of your website.

For WordPress users, that means making sure the WP core install, plugins, and themes are updated regularly, and that known and potential server security issues are dealt with as soon as possible.

The best way to check if there are any safe-browsing issues is to use Google Search Console under Security & Manual Actions.

A Jamstack website, on the other hand, means a clear separation of services. The front end and back end are decoupled, and you rely on APIs to run server-side processes. This leaves a much smaller surface exposed to malicious attacks.

HTTPS. Users’ privacy and security come first. HTTPS helps prevent anyone from tampering with the communication between your website and your users’ browsers. HTTPS has been a ranking signal since 2014, and its new role as part of the page experience signals emphasizes its importance.

There are two things to think about here: is your website served over HTTPS, and does it correctly redirect HTTP requests to HTTPS? First, if you haven’t done so already, enable HTTPS on your server as soon as possible. Then use tools like SEMRush, Ahrefs, or Screaming Frog SEO Spider to check that redirects from HTTP to HTTPS are implemented correctly.

Intrusive Interstitials. Nobody likes pop-ups, but the point is not to avoid them entirely; it’s to use them responsibly. Make sure pop-ups and interstitials (like those you might use for age verification, cookie consent, GDPR compliance, etc.) don’t block the website’s content completely (especially on mobile screens), don’t cause layout shifts, and don’t annoy users by appearing on every page or after every action. Check out the further reading section for examples.
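If you’d rather script the HTTPS redirect check mentioned above than run a crawler, the Python sketch below requests the `http://` version of a URL once, without following redirects, and checks that the first hop is a permanent (301 or 308) redirect to the `https://` version. It assumes the redirect keeps the same host and path; adjust `is_good_https_redirect` if you also redirect to a `www.` host, and note that some servers answer `HEAD` requests differently from `GET`.

```python
import http.client
from urllib.parse import urlsplit


def first_hop(url, timeout=10):
    """Request `url` once, without following redirects; return (status, Location header)."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        path = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def is_good_https_redirect(http_url, status, location):
    """True if the first hop permanently redirects to HTTPS on the same host and path."""
    if status not in (301, 308) or not location:
        return False
    source, target = urlsplit(http_url), urlsplit(location)
    return (
        target.scheme == "https"
        and target.hostname == source.hostname
        and (target.path or "/") == (source.path or "/")
    )
```

Usage: `status, location = first_hop("http://example.com/blog/")`, then pass both values to `is_good_https_redirect` together with the original URL. Running it over every URL in your sitemap gives you a quick site-wide check.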

Core Web Vitals

The idea behind Core Web Vitals is to give you sound, measurable proxies for real user experience (as measured through Real User Monitoring, RUM), and with them, solid footing for improving your website’s performance and UX.

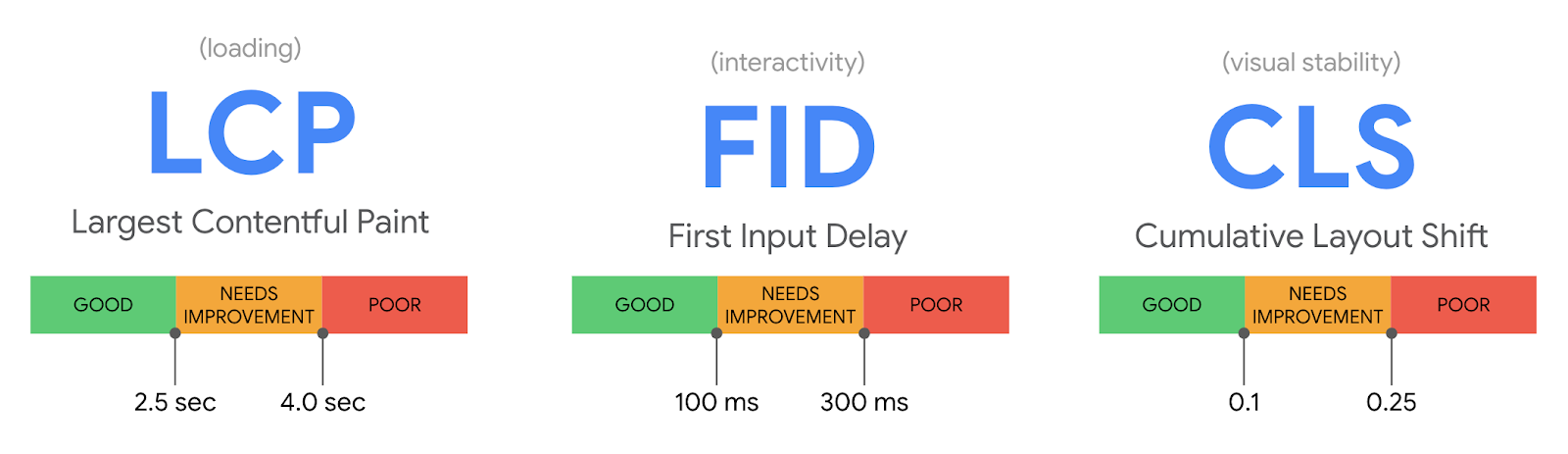

Core Web Vitals cover three aspects of a page (loading performance, interactivity, and visual stability), measured by three metrics: LCP, FID, and CLS.

Largest Contentful Paint (LCP) measures the loading speed, i.e., how long it takes for the largest above-the-fold element to show on the screen. In most cases, this is a hero image/video or the main block of text on the page.

First Input Delay (FID) measures a page’s responsiveness, i.e., the delay between a visitor’s first interaction with the page (think clicking a link or filling in a form) and the moment the browser can start responding to it.

Cumulative Layout Shift (CLS) measures how stable your page layout is while it loads. You know when you open a page and text that was in the middle of the screen suddenly shifts down? CLS measures how much such unexpected shifts affect your viewport.
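To make the CLS idea more concrete: Google defines the score of a single layout shift as its impact fraction (how much of the viewport the unstable element covers, before and after the move, combined) multiplied by its distance fraction (how far it moved, relative to the viewport’s largest dimension). The Python sketch below computes that for one element moving inside the viewport. Real browsers also combine multiple unstable elements and ignore shifts right after user input, and CLS itself is aggregated from many such individual scores; this sketch attempts none of that.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    x: float
    y: float
    width: float
    height: float

    def area(self):
        return max(0.0, self.width) * max(0.0, self.height)

    def intersect(self, other):
        """Overlapping rectangle (zero-sized if the two don't overlap)."""
        left, top = max(self.x, other.x), max(self.y, other.y)
        right = min(self.x + self.width, other.x + other.width)
        bottom = min(self.y + self.height, other.y + other.height)
        return Rect(left, top, max(0.0, right - left), max(0.0, bottom - top))


def layout_shift_score(before, after, viewport):
    """Score of ONE element moving from `before` to `after`: impact fraction x distance fraction."""
    b, a = before.intersect(viewport), after.intersect(viewport)
    # Impact fraction: union of the visible before/after areas, over the viewport area.
    union = b.area() + a.area() - a.intersect(b).area()
    impact_fraction = union / viewport.area()
    # Distance fraction: largest horizontal or vertical move over the viewport's largest dimension.
    distance = max(abs(after.x - before.x), abs(after.y - before.y))
    distance_fraction = distance / max(viewport.width, viewport.height)
    return impact_fraction * distance_fraction
```

For example, an element filling the top half of a 400×800 viewport that moves down by 200 pixels covers 75% of the viewport across both frames and moves 25% of the viewport height, so it scores 0.75 × 0.25 = 0.1875 on its own.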

To get a feel for your own website’s results, check its field data in Google’s Chrome UX Report, which is based on the previous 28-day collection period. At the time of writing, bejamas.io passes the Core Web Vitals assessment (First Input Delay, Largest Contentful Paint, and Cumulative Layout Shift; Time to First Byte is reported as well).

How you improve your CWV depends on your stack, e.g., whether the website is built on WordPress, Shopify, or a custom web dev solution. Still, there are several useful in-depth articles on improving CWV (check our further reading section) with general tips you might want to consider.

I won’t go into depth on those here. However, check out how we overhauled the code of one of our clients, BACKLINKO, to improve its CWV scores. Learning from others’ examples has always been one of the best ways to learn.

The impact of Page Experience signals

While Google has made it very clear that these UX ranking signals won’t outweigh content-related signals, we don’t know how much weight they will actually carry. I guess we’ll find out soon (May 31st, 2021).

But what we do know, or rather what Google has said, is that a page only needs to meet the minimum threshold for all Core Web Vitals to benefit from the associated ranking signal. This comes on top of the improvement your users will perceive, which can affect bounce rate, time on page, number of sessions, etc.
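For reference, Google’s published "good" thresholds are 2.5 seconds for LCP, 100 milliseconds for FID, and 0.1 for CLS (with "poor" starting above 4 seconds, 300 milliseconds, and 0.25, respectively), and they are evaluated at the 75th percentile of real page loads. The Python sketch below rates field samples against those thresholds. It uses a simple nearest-rank percentile, which is an assumption on our part and not necessarily how the Chrome UX Report aggregates its data.

```python
import math

# Google's published boundaries: (good up to, needs improvement up to); above that is "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout shift score
}


def percentile_75(values):
    """Nearest-rank 75th percentile (a simplification of real field-data aggregation)."""
    ordered = sorted(values)
    return ordered[max(1, math.ceil(0.75 * len(ordered))) - 1]


def rate(metric, value):
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def passes_assessment(samples):
    """samples: {"LCP": [...], "FID": [...], "CLS": [...]} collected from real page loads."""
    return all(rate(metric, percentile_75(samples[metric])) == "good" for metric in THRESHOLDS)
```

Because all three metrics must be "good" at the 75th percentile, one slow or jumpy template can fail the whole assessment even if most visits are fine.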

Remember that any CWV improvements you make will take at least 28 days to be fully reflected in Google’s data. This means the time to act is now!

The bottom line is that while improving Core Web Vitals may have a positive impact on your search engine ranking, it will definitely have a positive impact on your user experience!

And user experience (UX) is everything today. The page experience update is all about giving UX a voice in search algorithms. That alone makes time spent improving your Core Web Vitals time well spent.

What about performance, Core Web Vitals, and Jamstack?

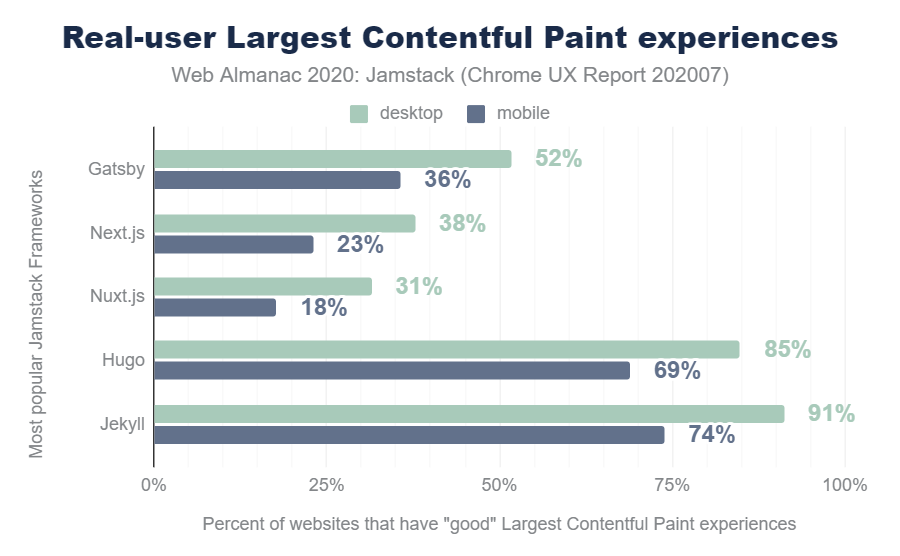

One of the most significant advantages Jamstack websites have over others is better performance. In case you didn’t know, with Jamstack, the HTML files are prebuilt and served from a CDN instead of being rendered on the server for each request. This, in turn, provides faster DOM-ready and full-page load times.
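To illustrate what "prebuilt" means, here is a deliberately tiny Python sketch of a build step: every page is rendered to a static `index.html` once, at build time, and the resulting folder is what you would upload to a CDN. The template, the `POSTS` data, and the `dist` folder name are hypothetical; a real static site generator adds routing, data fetching, and asset handling on top of this idea.

```python
import html
from pathlib import Path
from string import Template

# Hypothetical template and content; in a real project these come from your SSG and CMS.
PAGE = Template(
    "<!doctype html>\n"
    '<html lang="en">\n'
    "<head><title>$title</title></head>\n"
    "<body><main><h1>$title</h1>$body</main></body>\n"
    "</html>\n"
)

POSTS = [
    {"slug": "jamstack-seo", "title": "Jamstack SEO Guide", "body": "<p>Intro</p>"},
    {"slug": "core-web-vitals", "title": "Core Web Vitals & Jamstack", "body": "<p>Intro</p>"},
]


def render_page(post):
    """Fill the template; the title is escaped, the body is treated as trusted HTML."""
    return PAGE.substitute(title=html.escape(post["title"]), body=post["body"])


def build(posts, out_dir="dist"):
    """Write each post to <out_dir>/<slug>/index.html, ready to be served as-is from a CDN."""
    written = []
    for post in posts:
        target = Path(out_dir) / post["slug"] / "index.html"
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(render_page(post), encoding="utf-8")
        written.append(target)
    return written
```

The work happens once per deploy instead of once per request, which is why the CDN can answer with a finished HTML file straight away.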

Take a look at the real-world Core Web Vitals statistics of the top-five Jamstack static site generators (Web Almanac 2020: Jamstack).

Or check this website’s Lighthouse performance score (fancy animation and all).

Not bad, right?

Our case studies showcase improvements we’ve made for our clients with Jamstack. If you have a project at hand that’s perfect for Jamstack or are looking to move your website to a more performant stack, let’s talk.


What else can you do for your website to influence performance?

There are a few other things you should keep an eye on, and get right, to help your website perform better.

Performance budgets

If you are starting from scratch with a new website or planning a redesign, be mindful of performance budgets. The idea is to set performance goals for your build early on, so you can balance performance against functionality without harming your user experience.

It helped us immensely during our website rebuild (check out the Bejamas case study for more info). If you decide to go down this road, start your planning with a Performance Budget Calculator.
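A performance budget only helps if something enforces it. As a minimal sketch, the Python script below totals the size of your built files per extension and reports every file type that exceeds its budget; a CI job could fail the build whenever the result isn’t empty. The budget numbers and the `dist` folder are hypothetical placeholders, and the sizes are uncompressed bytes on disk, whereas many budgets are expressed as compressed transfer sizes.

```python
from pathlib import Path

# Hypothetical budgets in kilobytes per file type; replace them with your own targets.
BUDGET_KB = {".js": 170, ".css": 50, ".webp": 300}


def total_kb_by_type(build_dir="dist"):
    """Sum file sizes (uncompressed, in KB) per lowercase extension under build_dir."""
    totals = {}
    for path in Path(build_dir).rglob("*"):
        if path.is_file():
            ext = path.suffix.lower()
            totals[ext] = totals.get(ext, 0.0) + path.stat().st_size / 1024
    return totals


def over_budget(totals, budget=BUDGET_KB):
    """Return {extension: KB over budget} for each file type that exceeds its budget."""
    return {
        ext: round(size - budget[ext], 1)
        for ext, size in totals.items()
        if ext in budget and size > budget[ext]
    }
```

Usage: `problems = over_budget(total_kb_by_type("dist"))`; if `problems` is non-empty, print it and exit with a non-zero status so the deploy stops.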

URL Structure, site structure, and navigation

A clear URL and website structure, internal linking, and navigation influence how users and crawlers perceive your website. The importance, impact, and complexity of website structure grow with the size of your website. There are a few general rules you should stick to.

Google suggests that the fewer clicks it takes to get to a page from your homepage, the better it is for your website.

Planning your website structure alongside keyword research can help you increase your website’s authority and spread it efficiently and evenly over your pages, raising your chances to appear in search results for all of the desired keywords.

A clear website menu with links to your categories, keywords, and main pages is a must. Be mindful of internal linking, i.e., link only to topic-related posts in topic-related paragraphs. Avoid having so-called orphaned pages that aren’t linked from any other page of your website.
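Both the click-depth and orphaned-page rules can be checked mechanically once you have your internal links as a graph. The Python sketch below runs a breadth-first search from the homepage to get each page’s click depth and lists pages that no other page links to. The `links` mapping and page paths in the usage example are hypothetical; in practice you would build them from a crawl or from your generator’s page data.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search from the homepage: {page: minimum number of clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, all_pages, home="/"):
    """Pages (other than the homepage) that no other page links to."""
    linked = {target for source, targets in links.items() for target in targets if target != source}
    return sorted(set(all_pages) - linked - {home})


# Hypothetical example site.
links = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/jamstack-seo/"],
    "/blog/jamstack-seo/": ["/"],
}
pages = ["/", "/blog/", "/services/", "/blog/jamstack-seo/", "/old-landing/"]
```

Here `click_depths(links)` puts `/blog/jamstack-seo/` two clicks from home, and `orphan_pages(links, pages)` returns `["/old-landing/"]`. Any page missing from the depth map is unreachable from the homepage through links alone.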

Finally, use shorter, keyword-oriented URLs with hyphens to break up words for readability. Keep in mind that the URL should describe the content of the page as clearly as possible.
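Most generators create URL slugs for you, but if you need to build them yourself, here is a small Python sketch: it turns accented Latin letters into plain ASCII where Unicode decomposition allows, lowercases the text, drops punctuation, and joins the remaining words with hyphens. The optional `max_words` cap is an illustrative assumption for keeping URLs short, not a Google rule, and characters with no ASCII form (for example, non-Latin scripts) are simply dropped.

```python
import re
import unicodedata


def slugify(title, max_words=None):
    """Lowercase, ASCII-only, hyphen-separated URL slug built from a page title."""
    # Split accented letters into base letter + accent, then drop the non-ASCII accents.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", text.lower())
    if max_words:
        words = words[:max_words]
    return "-".join(words)
```

For example, `slugify("Jamstack SEO Guide: Core Web Vitals & More")` gives `jamstack-seo-guide-core-web-vitals-more`.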

JavaScript

Reduce the amount of JavaScript on your website. It is as simple as that. While JS adds to your website’s functionality, it can take a toll on performance, depending on how much of it you use.

In the new Core Web Vitals world, JS execution time is what influences First Input Delay (FID) the most.

In general, delay or remove third-party scripts, improve your JS performance, and defer non-critical scripts when you can. Always keep JS code below your main content so that it doesn’t degrade the user experience. Google Tag Manager, for example, can simplify managing custom JS.
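To find candidates for deferring, you can list the external scripts that block HTML parsing. The Python sketch below flags `<script src>` tags that have no `async` or `defer` attribute and aren’t `type="module"` (module scripts are deferred by default). It ignores inline scripts and doesn’t look at where in the document a script sits, so treat its output as a starting point rather than a verdict.

```python
from html.parser import HTMLParser


class ScriptAudit(HTMLParser):
    """Sorts external <script> tags into parser-blocking and non-blocking ones."""

    def __init__(self):
        super().__init__()
        self.blocking = []
        self.deferred = []

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        attrs = dict(attrs)  # boolean attributes such as `defer` map to None
        src = attrs.get("src")
        if not src:
            return  # inline scripts are out of scope for this sketch
        if "async" in attrs or "defer" in attrs or attrs.get("type") == "module":
            self.deferred.append(src)
        else:
            self.blocking.append(src)


def audit_scripts(html_doc):
    """Return (blocking_srcs, non_blocking_srcs) in document order."""
    parser = ScriptAudit()
    parser.feed(html_doc)
    return parser.blocking, parser.deferred
```

Every `src` in the first list is worth a second look: can it be deferred, loaded later, or removed altogether?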

Images

One of the most significant savings in overall page size and page load speed comes from optimizing images. First, you can utilize lazy loading.

Second, use the WebP (like we did here) or AVIF image formats, both designed to produce better-optimized, smaller files than JPGs (or PNGs).

That, in turn, makes for a faster website. Optimizing and compressing images and serving them from CDNs can help you get better Largest Contentful Paint (LCP) scores. Keep in mind that there are web design and UX aspects to image optimization as well; it is not just a question of resizing images.
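In plain HTML, the lazy loading and modern formats described above come together in a `<picture>` element. The Python sketch below builds one: browsers use the first `<source>` whose type they support, so AVIF is listed before WebP, with a JPEG fallback for everything else. The explicit `width` and `height` let the browser reserve space before the image loads, which helps CLS. It assumes `base.avif`, `base.webp`, and `base.jpg` already exist (for example, produced at build time); the paths are hypothetical. Pass `lazy=False` for an above-the-fold hero image, since that image is often the LCP element and shouldn’t be delayed.

```python
from html import escape


def picture_tag(base, alt, width, height, lazy=True):
    """<picture> with AVIF and WebP sources and a JPEG fallback; assumes all three files exist."""
    src = escape(base)
    loading = ' loading="lazy"' if lazy else ""
    return (
        "<picture>"
        f'<source srcset="{src}.avif" type="image/avif">'
        f'<source srcset="{src}.webp" type="image/webp">'
        f'<img src="{src}.jpg" alt="{escape(alt)}" width="{width}" height="{height}"{loading}>'
        "</picture>"
    )
```

For example, `picture_tag("/img/jamstack-seo-guide", "Jamstack SEO guide cover", 1200, 630)` also carries a keyword-rich, descriptive alt text, as discussed earlier for image SEO.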

Most static site generators try to provide a native image processing solution. If you use Gatsby, use the gatsby-image package, designed to work seamlessly with Gatsby’s native image processing capabilities powered by GraphQL and Sharp. It not only optimizes images but also automatically enables a blur-up effect and lazy loads images that are not currently on screen. Alternatively, you can use the new gatsby-plugin-image (currently in beta), which improves LCP and adds AVIF support.

Since version 10.0.0, Next.js has a built-in Image Component and Automatic Image Optimization. Images are lazy-loaded by default, rendered in a way that avoids Cumulative Layout Shift problems, served in modern formats like WebP when the browser supports them, and optimized on demand, as users request them.

Hugo users can apply this shortcode for resizing images, lazy loading, and progressive loading. Alternatively, you can use an open-source solution like ImageOptim and run it in your images folder. Finally, Jekyll users can do something like this here or set up Imgbot to help you out.

Don’t obsess over performance metrics, but be mindful of them. For example, if search results for your niche/topic/keyword are packed with pages full of videos and fancy animations, you can bet performance scores are an issue for most of them (I’m talking mid-range numbers). But that doesn’t mean your text-and-images page with high performance scores will rank well. And chances are it won’t convert the targeted audience well either. Why? Ranking is a multifactor game, and performance is just one piece of the puzzle.

Indexing and crawlability

All your performance efforts and the awesomeness of your content mean nothing if search engines can’t properly crawl and index your website. Allowing search bots to crawl your website is one thing. Making sure bots can crawl and discover all of the essential pages while staying away from those you don’t want them to see is something else.

Robots.txt and XML sitemap

The robots.txt file tells search bots which files and/or folders you do or don’t want them to crawl. It can help you keep entire sections of a website away from crawlers (for example, every WordPress website has a robots.txt file that prevents bots from crawling the admin directory). It can also be used to keep your images and PDFs from being indexed, or your internal search results pages from being crawled and showing up in search engine results.

Make sure your robots.txt file sits in your website’s top-level directory, that it indicates the location of your sitemaps, and that it doesn’t block any content/sections of your website you want crawled.
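A minimal robots.txt can be generated at build time in any language. The Python sketch below writes one rule group for all crawlers plus the `Sitemap` line, then uses the standard library’s `urllib.robotparser` to double-check that the pages you care about are still crawlable. The disallowed paths and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def make_robots_txt(disallow, sitemap_url):
    """One group for all crawlers, then the sitemap location; an empty Disallow allows everything."""
    lines = ["User-agent: *"]
    lines += [f"Disallow: {path}" for path in disallow] or ["Disallow:"]
    lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


def can_crawl(robots_txt, url, agent="Googlebot"):
    """Check a URL against the generated rules using the standard-library parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


robots = make_robots_txt(["/search/", "/admin/"], "https://example.com/sitemap.xml")
```

Write `robots` to the root of your output folder, and run `can_crawl` for your key pages in CI so an overly broad `Disallow` rule never ships unnoticed.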

A sitemap, on the other hand, is an XML file that provides crawlers with valuable information about the website structure and its pages. It tells the crawler which pages are important to your website, how important they are, when each page was last updated, how often it changes, whether there are alternate language versions of the page, etc.

A sitemap helps search engine crawlers index your pages faster, especially if you have thousands of pages and/or a deep website structure. Once you make a sitemap, use Google Search Console to let the big G know about it.
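If your generator doesn’t produce a sitemap for you, one is easy to build. The Python sketch below writes a sitemap in the sitemaps.org format with a `<loc>` and a `<lastmod>` for each page. It deliberately leaves out `<priority>` and `<changefreq>`, because Google documents that it ignores those two values. The URLs and dates in the usage example are hypothetical.

```python
import xml.etree.ElementTree as ET

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def make_sitemap(pages):
    """pages: iterable of (absolute URL, last-modified date as 'YYYY-MM-DD') pairs."""
    urlset = ET.Element("urlset", xmlns=NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > for us
        ET.SubElement(url, "lastmod").text = lastmod
    # Write the XML declaration ourselves so it always says UTF-8.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


sitemap = make_sitemap([
    ("https://example.com/", "2021-03-01"),
    ("https://example.com/blog/jamstack-seo/", "2021-03-15"),
])
```

Save the result as `sitemap.xml` (UTF-8) at your site root, reference it from robots.txt, and submit it in Google Search Console.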

Gatsby users can use plugins to create robots.txt and sitemap.xml automatically. Jekyll users can quickly generate sitemaps with the sitemap plugin, or manually by following this tutorial. As for robots.txt, just add a file to the root of your project.

Hugo ships with a built-in sitemap template file, while for robots.txt, Hugo can generate a customized file in the same way as any other template. If you are using Next.js, the easiest and most common way is to generate sitemap and robots.txt during the build with a solution like this one.

Duplicate content, redirects, and canonicalization

We all want Google to recognize our content as the original. But sometimes that’s a problem: when a single page is accessible via multiple URLs (e.g., via HTTP and HTTPS), when you share your original post on Medium and other blog-like platforms, or when different pages have similar content.

What are the problems, and what can you do in those cases?

Having the same or slightly different content on several pages or websites can be considered duplicate content. There is no one-size-fits-all answer to how similar content must be to count as a duplicate; it varies and is up to Google and other search engines to interpret. For example, eCommerce websites are bound to have the same content on multiple item pages, but that content is rarely labeled as duplicate.

However, if you deliberately use the same content across multiple pages and/or domains, you are most likely damaging the original pages/websites rather than helping them.

Why? Well, you are making it hard for search engines to decide which page is more relevant for a search query. If you don't explicitly tell Google which URL is original/canonical, you might end up getting a boost for the least expected page because Google will choose for you.

There are a couple of ways to deal with duplicated content, depending on the situation.

If duplicated content appears on one or a couple of internal pages, the best fix is, of course, to rewrite the content. But if the pages cover the same topic/keyword/product, consider setting up a 301 redirect from the duplicate page to the original. URL redirection also helps you inform search engines of changes you make to your website structure.

For example, if you decide to change your pages’ URL structure but would like to keep all the goodies backlinks bring with them, a 301 redirect declares the new URL the successor of the old one.

If you run your website on Netlify, you can easily configure redirects and rewrite rules in a _ file that you add to the root of the public folder. Similarly, if you have a project on Vercel, configure redirects in a vercel.json file at the root of your project, like this. Amazon S3 users, for example, can set redirects like this.
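If you keep a list of moved URLs, you can generate both kinds of redirect configuration from it. The Python sketch below emits lines in the `from to status` format of Netlify’s `_redirects` file and a `redirects` array for vercel.json. In Vercel’s format, `"permanent": true` answers with a 308 status; Google treats 308, like 301, as a permanent redirect. The `MOVED` mapping is hypothetical.

```python
import json

# Hypothetical old -> new URL map, e.g. after a URL structure change.
MOVED = {
    "/blog/2019/jamstack-seo-guide": "/blog/jamstack-seo/",
    "/services/web-dev": "/services/",
}


def netlify_redirects(moved):
    """One `from to 301` line per moved URL, in Netlify's _redirects format."""
    return "".join(f"{old} {new} 301\n" for old, new in moved.items())


def vercel_config(moved):
    """vercel.json content with a permanent redirect per moved URL."""
    redirects = [{"source": old, "destination": new, "permanent": True} for old, new in moved.items()]
    return json.dumps({"redirects": redirects}, indent=2)
```

Generating both from a single map keeps the redirects in sync if you ever move hosts, so no backlink ends up pointing at a 404.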

Another way to deal with duplicated content is by using the rel=canonical attribute in your link tag:

There are two ways you can use it. With the above code, you are pointing search engines to the original, canonical version of the page: the page the crawler is currently visiting should be treated as a copy of the specified URL.

Or use it as a self-referential rel=canonical link to the existing page, i.e.:

In both cases, the canonical attribute ensures that the right page or the preferred version of the page is indexed.
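Whichever option you use, the canonical URL should be one consistent, absolute form of the address. The Python sketch below maps any variant of a page’s URL to a single preferred one and builds the matching `<link rel="canonical">` tag. Give a page its own path for a self-referential canonical, or give a duplicate the original page’s path. The `https://example.com` origin, the trailing-slash policy, and dropping query strings are all assumptions for illustration; pages whose content depends on the query string need a different rule.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

SITE = "https://example.com"  # hypothetical preferred origin (scheme + host)


def canonical_url(path_or_url, site=SITE):
    """Map any variant (http://, query string, missing trailing slash) to one preferred URL."""
    path = urlsplit(path_or_url).path or "/"
    if not path.endswith("/"):
        path += "/"  # assumed policy: every page URL ends with a slash
    base = urlsplit(site)
    return urlunsplit((base.scheme, base.netloc, path, "", ""))


def canonical_link_tag(path_or_url, site=SITE):
    return f'<link rel="canonical" href="{escape(canonical_url(path_or_url, site))}">'
```

For example, `canonical_url("http://example.com/blog/jamstack-seo?utm_source=x")` returns `https://example.com/blog/jamstack-seo/`, so every variant points search engines at the same address.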

Gatsby, for example, has a simple plugin, gatsby-plugin-canonical-urls, which sets the base URL used for your website’s canonical URLs. If you are using Next.js, you can use a package called next-absolute-url or opt for Next SEO, a plugin that makes managing SEO easier in Next.js projects.

Hugo supports permalinks, aliases, link canonicalization, and multiple options for handling relative vs. absolute URLs, as explained here. One of the possible Jekyll canonical URLs solutions can be found here.

Structured data

Google and other search engines use Schema.org structured data to understand your page’s content better and allow your content to appear in rich results.

Correct implementation of structured data might not influence your ranking directly, but it gives you a fighting chance of appearing in around 30 different types of rich results powered by schema markup.

Creating appropriate structured data is pretty straightforward. Check out schema.org for schemas suitable for your content. Use Google’s Structured Data Markup Helper to guide you through the coding process, or a simple Schema Markup Generator.

With structured data being one of the ways you can provide Google (and other search engines) with detailed information about a page on your website, the biggest challenge is nailing the type you'll be using on a page. The best practice is to keep focused and generally use a single top-level Type on a given page.

Structured data helps most with search queries related to, for example, eCommerce, recipes, and jobs, i.e., queries whose results show more than just a title and description. Take a look at this post from Moz; it’ll help you understand which structured data is right for you.

There are two ways to handle structured data in a Jamstack setup. You’ll be happy to know that most headless CMSs let you manage structured data page by page, by defining custom components. Alternatively, you can add the schema as part of the template you are using.
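For the template route, the usual format is a JSON-LD `<script>` tag. The Python sketch below builds one with a single top-level schema.org `Article` type, in line with the best practice mentioned above. The property selection is a minimal illustration rather than a complete list of what Google supports, and the author name and URLs in the usage example are hypothetical.

```python
import json


def article_json_ld(headline, author, date_published, url, image=None):
    """schema.org Article as a JSON-LD <script> tag (one top-level type per page)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    if image:
        data["image"] = [image]
    # Escape "</" so no value can close the <script> element early; JSON reads "<\/" back as "</".
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```

For example, `article_json_ld("Jamstack SEO Guide", "Jane Doe", "2021-03-01", "https://example.com/blog/jamstack-seo/")` produces a tag you can drop into the page `<head>`; validate the output with Google’s Rich Results Test before shipping.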

Crawl budget

Crawl budget is best described as the level of attention search engines give your site. If you run a large website with tons of pages, prioritizing what to crawl, when to crawl it, and how many resources to allocate to crawling becomes hugely important, and that is what the crawl budget manages. Not addressing it properly can lead to important pages not being crawled and indexed.

Unless you are running a website with a considerable number of pages (think 10k+) or you’ve recently added a new section with tons of pages that need to be crawled, I’d suggest leaving your crawl budget on autopilot.

Still, it’s good to know there are a few things you can do to maximize your site’s crawl budget. We’ve already mentioned most of them: improving website performance, limiting redirects and duplicate content, and setting up a good website structure and internal linking.

Technical SEO in a nutshell

Today SEO is a collective effort of devs, UX, product, and SEO people. A balancing act between potential audience, search engines, and business goals and expectations. If done right, it isn't just a strategic way to grow website traffic. It can improve UX, conversion, and accessibility at the same time.

With speed and performance getting more attention from both users and search engines, having a reliable architecture backing your website performance has become necessary.

Jamstack may be a new way of building websites, but on top of performance and SEO benefits, it offers an impressive list of advantages over traditional stacks, with security and scalability among the top ones.

Is this the end of our Jamstack SEO guide? Not by a long shot. This is just the first part. In part two, I’ll talk about content and on-page and off-page optimization, and I’ll share a PDF of the complete guide with BONUS MATERIAL, i.e., case studies, to complement the whole guide.

Ready to move on? Click here for PART II: Content SEO