Layer0 Review and Features

Written by Ashita Gopalakrishnan

Last update: 6/7/2024

| Feature | Description | Layer0 |
|---|---|---|
| Infrastructure | What runs under the hood. | N/A |
| **Continuous Integration & Continuous Delivery (CI/CD)** | | |
| Continuous deployment | Imagine an automatic update system for your website: any changes you make to your code (usually in Git) are automatically deployed to your live website. | |
| Automated builds from Git | Easy integration with popular Git repository hosts such as GitHub, GitLab and Bitbucket. | |
| Instant rollbacks to any version | An easy way to promote any previous build to production without reverting commits or data changes. | |
| Site previews for every push | A new build with a unique URL for every commit and pull request. | |
| Compatible with all Static Site Generators | | |
| Notifications | Events triggered on a successful, canceled or failed build. | Email |
| Team Management | Create a team account and invite your teammates to the project. | |
| Custom domains | Bring your own domain and connect it to the project. | |
| Automatic HTTPS | SSL certificates are generated automatically. | |
| Rewrites & Redirects | HTTP 301 or 302 redirects from one URL to another. Rewrites work like a reverse proxy: they serve content from a different URL without changing the original URL. | |
| Password Protection | An easy way to restrict access to the website to users who have the password. Useful if you are working on a new site and want to keep it secret. | |
| Skew Protection | Keeps client and server versions synchronized during deployments, preventing compatibility issues. | N/A |
| **Free Tier** | | |
| Websites | Number of projects you can have on one Free Tier account. | N/A |
| Build Minutes | The amount of time your build scripts can run per month. | N/A |
| Concurrent builds | How many builds can run at the same time. | 1 |
| Bandwidth | The amount of data transferred to or from the CDN. | 100 GB/month |
| Team Members | The number of users (team members) that can be added to the team. | 3 |
| Build Time Per Deployment | Builds have to finish within a time limit; otherwise they fail with a timeout. | N/A |
| Build Memory Limit (MiB) | The amount of memory the system allocates to the build process. Some operations, such as image processing, are expensive and may need more memory. For Node.js, this is the max-old-space-size setting. | N/A |
| **Paid Plans** | | |
| Max team members before switching to custom pricing | | N/A |
| Git contributors have to be Team Members | To trigger a build, a Git contributor has to be a paid team member. | N/A |
| **Serverless** | | |
| Serverless Functions (API) | Small pieces of code that run on demand without managing servers, typically used for API endpoints. | JavaScript |
| Edge Functions | Serverless functions that run closer to the user, reducing latency and improving performance. | N/A |
| Background Functions | Code that runs in the background on the platform to perform tasks that don't require immediate user interaction. | |
| CRON Jobs | Schedule tasks to run automatically at specific times or intervals. Useful for automating repetitive website maintenance tasks. | |
| **Developer Experience** | | |
| CLI | Command-line interface tools that let developers perform tasks and manage the platform from the command line. | |
| Extensions | Additional plugins or add-ons that extend the platform's functionality. | |
| Environment Variables | Secret configuration settings for your website that change based on where it's deployed (dev, staging, production). | N/A |
| Build Logs | Track the progress and results of website builds for troubleshooting. | |
| Build Canceling | Stop a build that is currently running. This frees up resources and lets you ship changes faster by stopping builds you no longer need. | |
| **Platform Built-in Products** | | |
| Analytics | Tools for tracking and analyzing website traffic. | Real-time Insights, User Experience Scores, Web Vitals, Audiences |
| Authentication | Services for managing user logins and authentication. | |
| Database | Managed database services. | |
| Asset Optimizations | Tools for optimizing images, CSS, JS, etc. | |
| A/B Testing | Test different versions of your site by directing traffic to each variant, helping you optimize the user experience based on performance metrics. | Git branch-based split testing |
| Form Handling | Services for managing form submissions. | |
| Data Storage | Solutions for storing and managing data. | |
| Push Notifications | Lets your website send real-time alerts or updates to visitors who have opted in. | |
| Machine Learning | | |
| **Security & Compliance Offerings** | | |
| Two-factor authentication | Adds an extra layer of security when logging in. | |
| Team Logs | Tracks user activity within the platform for better accountability. | |
| SOC2 | Service Organization Control 2 compliance for managing customer data. | |
| ISO27001 | International standard for information security management. | |
| GDPR | Compliance with the General Data Protection Regulation for handling personal data. | |
| **Sustainability** | | |
| Carbon Neutral | | N/A |
| Carbon-free Energy | | N/A |
| **Integrations** | | |
| Integrations | Connecting your deployment platform with external services such as headless content, commerce, databases and more. | N/A |
| Custom build-system integrations | Connect your own build tools and processes to the deployment platform. | N/A |
| Support for self-hosted Git instances | | N/A |
| API mesh | Combines multiple APIs into a single unified API, simplifying data fetching and integration across different services and backends. | N/A |
| Deploy Preview feedback integrations | Lets team members and stakeholders comment directly on preview deployments. | N/A |
| Edge Functions integrations | | N/A |
| High Performance Build Memory and CPU | | N/A |
| Native Build Plugins | | N/A |

There's no doubt that Content Delivery Networks (CDNs) have helped reduce network congestion, thanks to servers distributed around the world that deliver content to users faster. Launched by Akamai in 1998, CDNs still serve their purpose today, with a market value expected to reach nearly 28 billion by 2025. Because CDNs handle such high volumes of data, they are responsible for about half of all internet traffic globally. CDNs also reduce bandwidth costs and provide protection against DDoS attacks.

With all these benefits, one might think CDNs have no drawbacks. However, traditional CDNs were built to serve static web pages and have not evolved to support dynamic ones. Gone are the days when websites dealt only with lightweight, static content. As the way content is consumed keeps shifting, CDNs have to deliver content quickly while also serving it well on all mobile devices. This poses a challenge for traditional CDNs, which have to evolve to adapt to these changes.

Modern websites have become increasingly complex, relying on databases, JavaScript files and third-party APIs.

What is Layer0?

Acquired by Limelight, Layer0 (formerly known as Moovweb XDN) is an integrated cloud platform for developing, deploying and monitoring the performance of websites. Unlike a traditional CDN that focuses on static content, Layer0 was built to handle dynamic websites: its CDN caches 95% of dynamic data.

With Layer0, you don't have to worry about slow page loads: the median Largest Contentful Paint (LCP) on the network is only 399 ms. Layer0 handles millions of pages while offering the speed of a static website.

Because Layer0 monitors content traffic, every enterprise customer receives protection against DDoS attacks. Security exploits are handled by Layer0 WAF, a web application firewall that blocks malicious traffic coming from or targeted at your application. Layer0 complies with a set of stringent privacy regulations and serves content over HTTPS by default.

The firewall includes a Bot Management option that uses a set of managed bot rules to let only legitimate web crawlers access the content while keeping traffic secure from potential attackers.

Layer0 supports multiple frameworks, including popular ones such as React and Next.js, and is a suitable option for deploying blazing fast Jamstack applications because it serves them from its edge network.

What is EdgeJS in Layer0?

EdgeJS is a first-of-its-kind "CDN-as-JavaScript" that can cache any content based on its URL path, rather than only caching static content by asset URL as traditional CDNs do. Because most CDNs cache only asset URLs, requests for pages and data still go to the origin, which slows a website down. EdgeJS also predicts which data a user is likely to tap next and uses the EdgeJS service worker to prefetch that data into the browser before the user needs it.

With the EdgeJS approach, you control routing and caching from your code base, so the routing logic is tested and reviewed just like the rest of the application code.
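Because the rules live in code, they can be exercised by ordinary unit tests. The following framework-free sketch is not the EdgeJS API: `rules`, `patternToRegExp` and `edgeTtlFor` are hypothetical names, and the sketch only illustrates matching a request's URL path against ordered patterns (such as `/products/:id`) to choose an edge cache time.

```javascript
// Hypothetical sketch: cache rules keyed by URL path pattern.
// NOT the Layer0/EdgeJS API; it only shows rules-in-code that can be unit-tested.

// Convert a pattern such as '/products/:id' into an anchored RegExp.
function patternToRegExp(pattern) {
  const source = pattern
    .split('/')
    .map((segment) =>
      segment.startsWith(':')
        ? '[^/]+' // named parameter: matches exactly one path segment
        : segment.replace(/[.*+?^${}()|[\]\\]/g, '\\$&') // escape literal text
    )
    .join('/');
  return new RegExp(`^${source}$`);
}

// Ordered rules; the first matching pattern wins.
const rules = [
  { pattern: '/', edgeMaxAgeSeconds: 60 * 60 },
  { pattern: '/products/:id', edgeMaxAgeSeconds: 24 * 60 * 60 },
  { pattern: '/api/cart', edgeMaxAgeSeconds: 0 }, // personalized: never cached
];

// Return the edge cache TTL for a request path, or null if no rule matches.
function edgeTtlFor(path) {
  for (const rule of rules) {
    if (patternToRegExp(rule.pattern).test(path)) return rule.edgeMaxAgeSeconds;
  }
  return null;
}

console.log(edgeTtlFor('/products/42')); // 86400
console.log(edgeTtlFor('/api/cart')); // 0
console.log(edgeTtlFor('/checkout')); // null
```

A test suite can pin these expectations down, so a change to a caching rule is reviewed like any other code change.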

Traditionally, you would need to deploy to staging to test CDN rules. That isn't the case with Layer0, because CDN rules can run locally on a developer's machine. Just like the rest of the code, EdgeJS rules go through a development cycle: they can be tested on a local machine, with a live log for every URL, before being pushed to production. Because the entire team can give feedback on the preview URL, this reduces rework and provides an efficient mechanism for error recovery. This is highly useful in scenarios such as:

- Iteratively migrating a site from a legacy monolithic architecture to an API-based architecture with a modern JavaScript framework

- Testing the site’s performance with the Edge Experiment module using split testing methods such as A/B testing.

- Setting up multiple backends on one domain (also known as a reverse proxy).

- Solving the problems of caching GraphQL APIs by parsing GraphQL requests and supporting HTTP POST requests.
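GraphQL requests are usually sent as HTTP POST with the query in the body, so a cache cannot key them by URL alone. A minimal sketch, assuming that requests with the same URL, operation, query text and variables should share one cache entry; it is not Layer0's implementation, and `graphqlCacheKey` and `stableStringify` are hypothetical helpers built on Node's `crypto` module.

```javascript
const { createHash } = require('crypto');

// Serialize with object keys sorted, so {a, b} and {b, a} give the same string.
function stableStringify(value) {
  if (Array.isArray(value)) return `[${value.map(stableStringify).join(',')}]`;
  if (value !== null && typeof value === 'object') {
    const entries = Object.keys(value)
      .sort()
      .map((key) => `${JSON.stringify(key)}:${stableStringify(value[key])}`);
    return `{${entries.join(',')}}`;
  }
  return JSON.stringify(value);
}

// Hypothetical cache key for a GraphQL POST body.
function graphqlCacheKey(url, rawBody) {
  const { query, variables = {}, operationName = null } = JSON.parse(rawBody);
  // Simplification: collapses all whitespace, including inside string literals.
  const normalizedQuery = query.replace(/\s+/g, ' ').trim();
  const material = [url, operationName, normalizedQuery, stableStringify(variables)].join('\n');
  return createHash('sha256').update(material).digest('hex');
}

const a = graphqlCacheKey('/graphql', JSON.stringify({
  query: 'query Product($id: ID!) {\n  product(id: $id) { name }\n}',
  variables: { id: '42', locale: 'en' },
}));
const b = graphqlCacheKey('/graphql', JSON.stringify({
  query: 'query Product($id: ID!) { product(id: $id) { name } }',
  variables: { locale: 'en', id: '42' },
}));
console.log(a === b); // true
```

Formatting differences and variable order no longer split the cache, while different variables still produce different keys.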

EdgeJS allows you to keep your existing CDN or replace it completely with its enhanced capabilities without slowing down the performance of your website.

How does Layer0 work?

Unlike a traditional CDN, Layer0 provides instant page loads by prefetching data even before the customer taps on the content. This modern CDN-as-JavaScript can either replace or enhance your existing CDN: it achieves a 95% cache hit ratio for dynamic content, compared with a 10-15% cache hit ratio on limited static assets for a traditional CDN.
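The gap between those hit ratios is easiest to see as origin load. Every request that misses the cache goes to the origin, so origin requests = total x (1 - hit ratio). A back-of-the-envelope sketch using the 95% and 10-15% figures above and a hypothetical 1,000,000 requests:

```javascript
// Origin requests implied by a cache hit ratio: misses = total * (1 - hitRatio).
function originRequests(totalRequests, hitRatio) {
  return Math.round(totalRequests * (1 - hitRatio));
}

const total = 1_000_000; // hypothetical number of page requests

console.log(originRequests(total, 0.95)); // 50000
console.log(originRequests(total, 0.15)); // 850000
console.log(originRequests(total, 0.1)); // 900000
```

At these ratios, the origin handles roughly 17 to 18 times fewer requests.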

Because Limelight Networks has the second-largest global network of PoPs, Layer0 can serve data from the edge, closer to the user's location. Prefetching content into the browser relies on the L1 Edge Cache and the L2 Shield Cache. Prefetch requests are served only from the edge network, so the origin server is not overwhelmed with unnecessary requests.

L1 Edge Cache: prefetched content is first served from the edge layer, a network of nearly 100 global PoPs, for a seamless user experience with minimal latency.

L2 Shield Cache: to maximize the global cache hit ratio and reduce traffic load on your servers, requests are also cached in this layer. This ensures that multiple requests for a particular URL, even when they arrive at different edge PoPs, receive the same cached response instead of each going to your servers.
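A toy model makes the two tiers concrete. This sketch is hypothetical (`TwoTierCache`, the PoP names and the origin function are made up) and ignores expiry, but it shows why a shared L2 shield means that a second PoP's first request for a URL does not reach the origin. The status strings reuse the HIT-L1, HIT-L2 and MISS labels that Layer0 reports in its server-timing header.

```javascript
// Toy two-tier cache: per-PoP L1 edge caches in front of one shared L2 shield.
class TwoTierCache {
  constructor(fetchFromOrigin) {
    this.edges = new Map(); // L1: one Map per edge PoP
    this.shield = new Map(); // L2: shared by every PoP
    this.fetchFromOrigin = fetchFromOrigin;
    this.originCalls = 0;
  }

  // Look up url as seen from the given PoP; status mirrors HIT-L1 / HIT-L2 / MISS.
  get(pop, url) {
    if (!this.edges.has(pop)) this.edges.set(pop, new Map());
    const edge = this.edges.get(pop);

    if (edge.has(url)) return { body: edge.get(url), status: 'HIT-L1' };

    if (this.shield.has(url)) {
      const body = this.shield.get(url);
      edge.set(url, body); // fill this PoP's edge cache on the way back
      return { body, status: 'HIT-L2' };
    }

    this.originCalls += 1;
    const body = this.fetchFromOrigin(url);
    this.shield.set(url, body);
    edge.set(url, body);
    return { body, status: 'MISS' };
  }
}

// Hypothetical PoP names and origin.
const cache = new TwoTierCache((url) => `<html>${url}</html>`);
console.log(cache.get('fra', '/about').status); // MISS
console.log(cache.get('fra', '/about').status); // HIT-L1
console.log(cache.get('nyc', '/about').status); // HIT-L2
console.log(cache.originCalls); // 1
```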

Layer0 CDN Features

The Layer0 CDN comes with many features that can be used when deploying a Jamstack application. Layer0 supports all major frameworks and serverless JavaScript to handle server-side rendering (SSR). Major features include caching, data prefetching, routing, performance monitoring, security and deployment.

Layer0 CLI

Layer0 CLI can be easily installed via npm or yarn.

```bash
npm i -g @layer0/cli
```

or

```bash
yarn global add @layer0/cli
```

Prefetching

Layer0 prefetches web pages and APIs without adding extra load on your infrastructure. Prefetch requests are served only from the edge cache; Layer0 never forwards them to the origin server. With prefetching, customers get a better user experience because the network calls have already completed before the page transition happens.

In Layer0, once the service worker is set up, you can prefetch a URL from the edge by using the Prefetch component from @layer0/react.

```jsx
import { Prefetch } from '@layer0/react'

function MyComponent() {
  // Set url to the page or API URL to prefetch (left empty in this example)
  return (
    <Prefetch url="">
      <a href="pages/about"> About Page </a>
    </Prefetch>
  )
}
```

Prefetch requests are given the lowest priority so that critical requests are never blocked. Critical requests, such as API calls, images and scripts, are given higher priority than prefetched web pages.
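The prioritization idea can be sketched as a queue that always drains critical requests before prefetches. This is a hypothetical model (`RequestQueue` and the `critical`/`prefetch` labels are made up), not how Layer0 or the browser schedules requests internally.

```javascript
// Lower number = dequeued first (hypothetical priority labels).
const PRIORITY = { critical: 0, prefetch: 1 };

class RequestQueue {
  constructor() {
    this.items = [];
    this.seq = 0; // insertion counter keeps FIFO order within one priority level
  }

  enqueue(url, kind) {
    this.items.push({ url, priority: PRIORITY[kind], seq: this.seq++ });
    this.items.sort((a, b) => a.priority - b.priority || a.seq - b.seq);
  }

  dequeue() {
    const next = this.items.shift();
    return next === undefined ? undefined : next.url;
  }
}

const queue = new RequestQueue();
queue.enqueue('/pages/about', 'prefetch');
queue.enqueue('/api/cart', 'critical');
queue.enqueue('/pages/contact', 'prefetch');
queue.enqueue('/img/hero.jpg', 'critical');

const order = [];
for (let url = queue.dequeue(); url !== undefined; url = queue.dequeue()) order.push(url);
console.log(order.join(' > ')); // /api/cart > /img/hero.jpg > /pages/about > /pages/contact
```

Prefetches still run, but only once nothing more important is waiting.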

While JSON API responses and HTML documents are prefetched by default, Layer0 also lets you prefetch important assets referenced within those responses, such as images, CSS and JavaScript, so that all page data is present before the page transition occurs. This is known as Deep Fetching.

To add Deep Fetching in your project in addition to prefetching, add the DeepFetchPlugin to the service worker.

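Deep Fetching

```javascript
import { Prefetcher } from '@layer0/prefetch/sw'
import DeepFetchPlugin from '@layer0/prefetch/sw/DeepFetchPlugin'

// Runs in the service worker: when a page is prefetched, also prefetch the
// first image with the class product-media found in its HTML.
new Prefetcher({
  plugins: [
    new DeepFetchPlugin([
      {
        selector: 'img.product-media', // CSS selector to look for in the prefetched HTML
        maxMatches: 1, // prefetch at most one matching element
        attribute: 'src', // attribute that holds the URL to prefetch
        as: 'image', // type of resource being prefetched
      },
    ]),
  ],
})
```

The above example adds the DeepFetchPlugin so that an image with the CSS class product-media is prefetched along with the page.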
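What the plugin configuration asks for can be approximated in plain JavaScript. This is a simplified, hypothetical model: elements are pre-parsed plain objects, only `tag.class` selectors are supported, and `deepFetchUrls` is not part of `@layer0/prefetch`. It returns the asset URLs (with their `as` type) that would be prefetched.

```javascript
// Hypothetical approximation of a deep-fetch rule applied to parsed HTML elements.
function deepFetchUrls(elements, { selector, maxMatches, attribute, as: asType }) {
  const [tag, className] = selector.split('.'); // only 'tag.class' selectors in this sketch
  return elements
    .filter((el) => el.tag === tag && el.classes.includes(className) && el.attributes[attribute])
    .slice(0, maxMatches) // stop after maxMatches elements, like the plugin option
    .map((el) => ({ href: el.attributes[attribute], as: asType }));
}

// Hypothetical elements extracted from a prefetched product page.
const parsedResponse = [
  { tag: 'img', classes: ['logo'], attributes: { src: '/logo.svg' } },
  { tag: 'img', classes: ['product-media'], attributes: { src: '/p/1-large.jpg' } },
  { tag: 'img', classes: ['product-media'], attributes: { src: '/p/1-alt.jpg' } },
];

const toPrefetch = deepFetchUrls(parsedResponse, {
  selector: 'img.product-media',
  maxMatches: 1,
  attribute: 'src',
  as: 'image',
});
console.log(toPrefetch.map((item) => item.href).join(', ')); // /p/1-large.jpg
```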

Caching

Layer0 lets you cache content by page URL using EdgeJS. (Note: before setting a cache response for a website, you need to configure an environment.)

To set caching for the browser and the edge, call the cache function in the route's callback. To maximize cache hit rates, set the maxAgeSeconds and staleWhileRevalidateSeconds values under the edge key, as shown in the example below.

Example:

routes.js

```javascript
const { Router } = require("@layer0/core/router");
const { frontityRoutes } = require("@layer0/frontity");

// Cache settings shared by the page routes below
const PAGE_CACHE_TTL = {
  edge: {
    maxAgeSeconds: 60 * 60, // fresh at the edge for 1 hour
    staleWhileRevalidateSeconds: 24 * 60 * 60 // then served stale for up to 24 hours while revalidating
  },
  browser: {
    maxAgeSeconds: 0, // don't store in the browser's HTTP cache
    serviceWorkerSeconds: 60 * 60 // let the service worker cache it for 1 hour
  }
};

module.exports = new Router()
  .get("/", ({ cache }) => cache(PAGE_CACHE_TTL))
  .get("/about-us", ({ cache }) => cache(PAGE_CACHE_TTL))
  .use(frontityRoutes);
```

The maxAgeSeconds value sets how long a response is cached and served as fresh. The staleWhileRevalidateSeconds value adds a buffer past maxAge during which the cached content is still returned to the client while a request is made to the origin to check whether newer content is available.
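The two edge settings split a cached response's life into three windows. A minimal sketch, assuming age is measured from when the response was cached; `cacheState` is a hypothetical helper, not a Layer0 API, and the values are the edge settings from `PAGE_CACHE_TTL`. The last line shows the equivalent standard HTTP directives as a general analogy, not necessarily the headers Layer0 sends.

```javascript
// Classify a cached response by its age (seconds since it was cached).
function cacheState(ageSeconds, { maxAgeSeconds, staleWhileRevalidateSeconds }) {
  if (ageSeconds < maxAgeSeconds) return 'fresh'; // served from cache, no origin request
  if (ageSeconds < maxAgeSeconds + staleWhileRevalidateSeconds) {
    return 'stale-while-revalidate'; // served from cache; origin re-checked in the background
  }
  return 'expired'; // origin must be contacted before responding
}

// The edge values from PAGE_CACHE_TTL: 1 hour fresh, then up to 24 hours stale.
const edge = { maxAgeSeconds: 60 * 60, staleWhileRevalidateSeconds: 24 * 60 * 60 };

console.log(cacheState(30 * 60, edge)); // fresh
console.log(cacheState(5 * 60 * 60, edge)); // stale-while-revalidate
console.log(cacheState(26 * 60 * 60, edge)); // expired

// General HTTP analogue for shared caches (s-maxage; stale-while-revalidate from RFC 5861).
console.log(`s-maxage=${edge.maxAgeSeconds}, stale-while-revalidate=${edge.staleWhileRevalidateSeconds}`);
// s-maxage=3600, stale-while-revalidate=86400
```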

Edge Experiment

Layer0 lets you run split tests (called Edge Experiments) on the same website, which allows A/B testing or splitting traffic between multiple variations of your website.

For example, to split traffic between a new website and a legacy website, first add a backend for each site in the layer0.config.js file.

layer0.config.js

```javascript
module.exports = {
  backends: {
    legacy: {
      domainOrIp: 'legacy-reactstorefront.io',
    },
    new: {
      domainOrIp: 'reactstorefront.io',
    },
  },
}
```

Once the backends are defined, add a destination for each experience in the routes.js file.

routes.js

```javascript
const { Router } = require('@layer0/core/router')

module.exports = new Router()
  .destination(
    'legacy_experience',
    new Router()
      .fallback(({ proxy }) => proxy('legacy')),
  )
  .destination(
    'new_experience',
    new Router()
      .fallback(({ proxy }) => proxy('new')),
  )
```

To deploy the site, run the following command:

```bash
0 deploy --environment={ my production environment name }
```

You will need to create a separate environment and then configure the split-testing rules in the Layer0 console by setting the split percentage for each destination.

To route a request, Layer0 assigns each user a random number between 1 and 100 at the edge, stored in a cookie called layer0_bucket, before the request hits the cache.

To identify which experience a browser received, Layer0 automatically sets a cookie called layer0_destination to the name of the chosen destination.
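The bucketing described above can be sketched as follows. The sketch assumes splits are evaluated in order as cumulative ranges and that the percentages add up to 100; `pickDestination` and `randomBucket` are hypothetical names, the 80/20 split is made up, and the destination names come from the routes.js example.

```javascript
// Map a bucket in [1, 100] to a destination using cumulative split percentages.
function pickDestination(bucket, splits) {
  let upperBound = 0;
  for (const { destination, percent } of splits) {
    upperBound += percent;
    if (bucket <= upperBound) return destination;
  }
  throw new Error('bucket is above the sum of the split percentages');
}

// Random integer from 1 to 100 inclusive, modeling the layer0_bucket value.
function randomBucket() {
  return Math.floor(Math.random() * 100) + 1;
}

// Hypothetical 80/20 split between the destinations defined in routes.js.
const splits = [
  { destination: 'legacy_experience', percent: 80 },
  { destination: 'new_experience', percent: 20 },
];

const bucket = randomBucket(); // assigned once per user; a cookie keeps it stable
console.log(bucket, pickDestination(bucket, splits));
```

Storing the bucket in a cookie is what keeps a returning user in the same variant across requests.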

For reporting business metrics, Layer0 is compatible with a variety of A/B testing tools that support server-side integration such as Optimizely, Google experiments and Optimizer.

Tracking Core Web Vitals with Layer0

Core Web Vitals are important metrics for understanding a website's performance. By tracking them with Layer0, you can see how changes to a website affect it in real time and find out how each page performs, even if the site is not hosted on Layer0.

To track Core Web Vitals, Layer0 provides configuration guides for each setup method, such as inserting a script tag or using a tag management system like Google Tag Manager. Layer0 also provides a Core Web Vitals library that can be installed via a package manager.

Example of using a Script Tag along with Google Tag Manager

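```html
<script>
  function initMetrics() {
    new Layer0.Metrics({
      token: 'your-token-here'
    }).collect()
  }

  var rumScriptTag = document.createElement('script')
  rumScriptTag.src = 'https://rum.layer0.co/latest.js'
  rumScriptTag.setAttribute('defer', '')
  // The event handler property is lowercase "onload"; "onLoad" would never fire
  rumScriptTag.onload = initMetrics
  // The script is only fetched once it is added to the document
  document.body.appendChild(rumScriptTag)
</script>
```

Incremental Static Regeneration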

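Introduced to the Jamstack community by Next.js, Incremental Static Regeneration (ISR) serves a static loading page while a page that has not been pre-rendered is rendered on the server, then caches the result. With Layer0, you can implement the same approach, Incremental Static Generation (ISG), for any framework.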

ISG for any framework can be enabled by configuring the Layer0 router to serve static rendered pages using the serveStatic method.

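routes.js

```javascript
const { Router } = require('@layer0/core/router')

const router = new Router()

// Serve pre-rendered product pages; render on demand when one is missing
router.get('/products/:id', ({ serveStatic, renderWithApp }) => {
  serveStatic('dist/products/:id.html', {
    onNotFound: () => renderWithApp(), // no static file: fall back to server-side rendering
    loadingPage: 'dist/products/loading.html', // returned while the page is being rendered
  })
})

module.exports = router
```

In the above example, serveStatic looks for a pre-rendered file for the requested product. If the file does not exist, onNotFound falls back to server-side rendering with renderWithApp(), and loadingPage tells Layer0 to return the static loading page immediately while the page is rendered, so that future requests can be served the rendered result.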

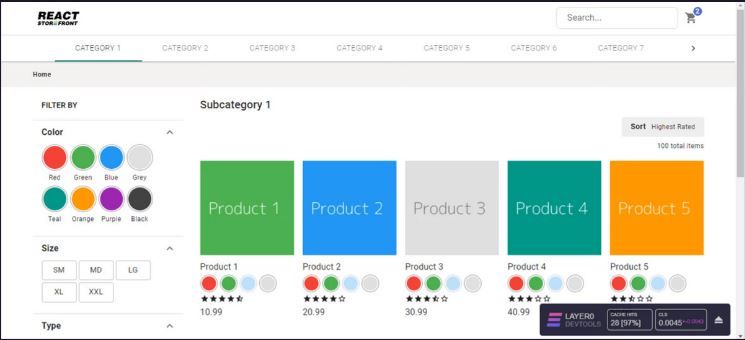
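The request flow behind serveStatic with onNotFound and loadingPage can be modeled in memory. This is a hypothetical sketch: `createIsgHandler`, the `Map`-based caches and `flush()` (which stands in for the background render finishing) are illustrative stand-ins, not Layer0 APIs.

```javascript
// Hypothetical in-memory model of: static file -> cached render -> loading page.
function createIsgHandler({ staticFiles, loadingPage, renderPage }) {
  const edgeCache = new Map(); // stands in for the edge cache
  const pending = new Set(); // paths whose server-side render has been scheduled

  function handle(path) {
    if (staticFiles.has(path)) return staticFiles.get(path); // pre-rendered at build time
    if (edgeCache.has(path)) return edgeCache.get(path); // rendered earlier, now cached
    pending.add(path); // schedule a server-side render
    return loadingPage; // respond immediately with the loading page
  }

  // Simulate the background renders finishing and being cached.
  function flush() {
    for (const path of pending) edgeCache.set(path, renderPage(path));
    pending.clear();
  }

  return { handle, flush };
}

const isg = createIsgHandler({
  staticFiles: new Map([['/products/1', '<h1>Product 1 (static)</h1>']]),
  loadingPage: '<p>Loading...</p>',
  renderPage: (path) => `<h1>${path} (SSR)</h1>`,
});

console.log(isg.handle('/products/1')); // <h1>Product 1 (static)</h1>
console.log(isg.handle('/products/2')); // <p>Loading...</p>
isg.flush();
console.log(isg.handle('/products/2')); // <h1>/products/2 (SSR)</h1>
```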

Layer0 Devtools

To understand how your site performs, install Layer0 Devtools by running the following command:

```bash
npm i -D @layer0/devtools
```

Layer0 Devtools provides statistics about how a site interacts with Layer0, such as caching at the edge and in the browser, prefetch requests, the number of requests sent to the serverless layer after a cache miss at the edge, and Web Vitals information such as LCP and CLS.
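To interpret the LCP and CLS values reported, a small helper can rate them against Google's published Core Web Vitals thresholds (LCP: good at or below 2.5 s, poor above 4 s; CLS: good at or below 0.1, poor above 0.25). These thresholds come from Google, not from Layer0 Devtools, and `rate` is a hypothetical helper.

```javascript
// Google's Core Web Vitals thresholds: [upper bound for "good", upper bound for "needs improvement"].
const THRESHOLDS = {
  LCP: [2500, 4000], // Largest Contentful Paint, milliseconds
  CLS: [0.1, 0.25], // Cumulative Layout Shift, unitless score
};

function rate(metric, value) {
  const [good, needsImprovement] = THRESHOLDS[metric];
  if (value <= good) return 'good';
  if (value <= needsImprovement) return 'needs improvement';
  return 'poor';
}

console.log(rate('LCP', 399)); // good
console.log(rate('LCP', 3200)); // needs improvement
console.log(rate('CLS', 0.3)); // poor
```

By this scale, the 399 ms median LCP quoted earlier in this article falls well within the "good" range.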

Layer0 Status Codes and Limits

Besides standard HTTP status codes such as 404 and 412, the Layer0 platform defines its own response codes.

The Layer0 platform also enforces request and response size limits on each project.

Layer0 populates the server-timing response header to show how long a response took and whether it was a cache hit or miss. These values can easily be found in the devtools.

The following values are added to the server-timing header:

1. layer0-cache, with desc set to a value that shows whether the page was served from the cache. It can be one of three values:

   - HIT-L1 indicates that the page was served from the edge cache.

   - HIT-L2 indicates that the page was served from the shield cache.

   - MISS indicates a cache miss: the page was not served from either cache.

2. country, with desc set to the two-letter code of the country from which the request was sent.

3. xrj, with desc set to the route, serialized as JSON.
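A small parser shows how these values can be read programmatically, for example in a monitoring script. It assumes the header follows the standard Server-Timing syntax (entries separated by commas, parameters by semicolons, such as `layer0-cache;desc=HIT-L1`); the sample header, including the JSON inside `xrj`, is made up for illustration, and all function names are hypothetical.

```javascript
// Split on a delimiter, ignoring delimiters inside double-quoted strings.
function splitOutsideQuotes(text, delimiter) {
  const parts = [];
  let current = '';
  let inQuotes = false;
  for (let i = 0; i < text.length; i++) {
    const ch = text[i];
    if (ch === '\\' && inQuotes && i + 1 < text.length) {
      current += ch + text[++i]; // keep an escaped character (e.g. \") untouched
      continue;
    }
    if (ch === '"') inQuotes = !inQuotes;
    if (ch === delimiter && !inQuotes) {
      parts.push(current);
      current = '';
    } else {
      current += ch;
    }
  }
  parts.push(current);
  return parts.map((p) => p.trim()).filter((p) => p.length > 0);
}

// Strip surrounding quotes and unescape a quoted-string value.
function unquote(value) {
  if (value.length >= 2 && value.startsWith('"') && value.endsWith('"')) {
    return value.slice(1, -1).replace(/\\(.)/g, '$1');
  }
  return value;
}

// Parse a Server-Timing header into { metricName: { param: value } }.
function parseServerTiming(header) {
  const metrics = {};
  for (const entry of splitOutsideQuotes(header, ',')) {
    const [name, ...params] = splitOutsideQuotes(entry, ';');
    metrics[name] = {};
    for (const param of params) {
      const eq = param.indexOf('=');
      if (eq === -1) continue; // parameter without a value
      metrics[name][param.slice(0, eq).trim()] = unquote(param.slice(eq + 1).trim());
    }
  }
  return metrics;
}

// Hypothetical header value shaped after the fields described above.
const header = 'layer0-cache;desc=HIT-L1, country;desc=US, xrj;desc="{\\"path\\":\\"/about\\"}"';
const timing = parseServerTiming(header);
console.log(timing['layer0-cache'].desc); // HIT-L1
console.log(timing.country.desc); // US
console.log(JSON.parse(timing.xrj.desc).path); // /about
```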

Layer0 also has extensive debug headers to show the precise timing value for key components in the system.

Deployment

You can deploy your website on Layer0 through the command line or by using a CI platform.

Through CLI

To deploy a project to Layer0, run the following command on your CLI:

```bash
0 deploy
```

The CLI outputs a URL after the website is successfully deployed to Layer0. The site name is based on the name field in package.json; however, it can be overridden with the --site option.

Layer0 creates a permanent, unique URL for each deployment based on the site name, the branch name in source control and the deployment number. This makes it easy for developers to trace where a bug originated before opening a pull request.
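Why such URLs are both stable and unique can be seen from how the parts combine: the deployment number never repeats for a site and branch. The sketch below is hypothetical; the `site-branch-number` format is illustrative and not Layer0's real hostname scheme. It sanitizes the branch the same way the GitHub Actions workflow in this section does (stripping `refs/heads/` and replacing `/` with `_`).

```javascript
// Mirror the workflow's branch handling: drop 'refs/heads/' and replace '/' with '_'.
function sanitizeBranch(ref) {
  return ref.replace(/^refs\/heads\//, '').replace(/\//g, '_');
}

// Hypothetical identifier format; not Layer0's actual hostname scheme.
function deploymentId(siteName, ref, deploymentNumber) {
  return `${siteName}-${sanitizeBranch(ref)}-${deploymentNumber}`;
}

console.log(deploymentId('my-store', 'refs/heads/feature/new-checkout', 12));
// my-store-feature_new-checkout-12
console.log(deploymentId('my-store', 'refs/heads/feature/new-checkout', 13));
// my-store-feature_new-checkout-13
```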

Through a CI/CD platform

The recommended way to deploy your app on Layer0 is through a popular CI tool. To do that, create a deploy token in the Layer0 console and pass it with the --token option in your CI script when deploying.
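In a CI script, the deploy command is usually assembled from the build context. The helper below is a hypothetical sketch that mirrors the GitHub Actions workflow in this section: it always passes `--branch` and `--token`, and maps push, pull_request and release events to the default, staging and production environments. The flags are the ones that workflow uses (there they are passed through `npm run deploy`); `deployArgs` itself is made up.

```javascript
// Hypothetical helper mirroring the "Deploy to Layer0" step of the workflow.
const environmentByEvent = new Map([
  ['push', 'default'],
  ['pull_request', 'staging'],
  ['release', 'production'],
]);

function deployArgs(eventName, branchName, token) {
  const args = [`--branch=${branchName}`, `--token=${token}`];
  const environment = environmentByEvent.get(eventName);
  if (environment) args.push(`--environment=${environment}`); // other events: no environment flag
  return args;
}

console.log(['0', 'deploy', ...deployArgs('pull_request', 'feature_login', 'TOKEN')].join(' '));
// 0 deploy --branch=feature_login --token=TOKEN --environment=staging
```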

For example, consider setting up CI/CD with the GitHub Actions free tier. The deploy token created in the Layer0 console must be saved in the GitHub repository. To save the deploy token, follow these steps:

- Go to Settings in your GitHub project.

- Click Secrets and add a New repository secret. Save it as LAYER0_DEPLOY_TOKEN.

- In your project, create a .github folder. Inside it, create a folder named workflows and add a layer0.yml file containing the configuration needed to deploy on Layer0.

.github/workflows/layer0.yml

```yaml
name: Deploy branch to Layer0

on:
  push:
    branches: [master, main]
  pull_request:
  release:
    types: [published]

jobs:
  deploy-to-layer0:
    # cancels the deployment for the automatic merge push created when tagging a release
    if: contains(github.ref, 'refs/tags') == false || github.event_name == 'release'
    runs-on: ubuntu-latest
    env:
      deploy_token: ${{secrets.LAYER0_DEPLOY_TOKEN}}
    steps:
      - name: Check for Layer0 deploy token secret
        if: env.deploy_token == ''
        run: |
          echo You must define the "LAYER0_DEPLOY_TOKEN" secret in GitHub project settings
          exit 1
      - name: Extract branch name
        shell: bash
        run: echo "BRANCH_NAME=$(echo ${GITHUB_REF#refs/heads/} | sed 's/\//_/g')" >> $GITHUB_ENV
      - uses: actions/checkout@v1
      - uses: actions/setup-node@v1
        with:
          node-version: 14
      - name: Cache node modules
        uses: actions/cache@v1
        env:
          cache-name: cache-node-modules
        with:
          path: ~/.npm # npm cache files are stored in `~/.npm` on Linux/macOS
          key: ${{ runner.os }}-build-${{ env.cache-name }}-${{ hashFiles('**/package-lock.json') }}
          restore-keys: |
            ${{ runner.os }}-build-${{ env.cache-name }}-
            ${{ runner.os }}-build-
            ${{ runner.os }}-
      - name: Install packages
        run: npm ci # if using npm for your project
        # run: rm -rf node_modules && yarn install --frozen-lockfile # if using yarn for your project
      - name: Deploy to Layer0
        run: |
          npm run deploy -- ${{'--branch=$BRANCH_NAME' || ''}} --token=$deploy_token \
          ${{github.event_name == 'push' && '--environment=default' || ''}} \
          ${{github.event_name == 'pull_request' && '--environment=staging' || ''}} \
          ${{github.event_name == 'release' && '--environment=production' || ''}}
        env:
          deploy_token: ${{secrets.LAYER0_DEPLOY_TOKEN}}
```

Conclusion

What sets Layer0 apart is that it exposes the toolset needed to power sub-second websites. This truly makes a difference, because a page delayed by even half a second can hurt a website's performance enough to cost billions of dollars in sales per year. Layer0 does the hard work of delivering websites instantly, with a cache hit ratio of 98% and 99.991% uptime.

An all-in-one platform, Layer0 is a suitable choice not only for deploying Jamstack websites within a few minutes but also for testing CDN configuration locally. Build your edge logic with EdgeJS, the world's first JS-based CDN, and test it locally and in pre-production before moving to production. For every feature branch, a preview URL is generated, giving you unlimited pre-production environments where team members can provide their assessment.
